Internet performance talk at IGF 2018

In this blog post I’ll share the slides I presented at the 2018 Internet Governance Forum (IGF) in a session titled “Net Neutrality: Measuring Discriminatory Practices”, along with some extra comments that occurred to me after delivering the talk, while preparing this follow-up post.

In my first slide, I recapped OONI’s experience with measuring performance of an emulated DASH flow in collaboration with Fight for the Future. What we did was basically reimplement Neubot’s DASH test in Measurement Kit (OONI’s measurement library) and run experiments.
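To give a flavour of what an emulated DASH flow does, here is a minimal sketch of the rate-adaptation step: after each segment download, the client estimates its throughput and picks the next representation from a bitrate ladder. The function name, the ladder values, and the "highest rate not above the last measurement" rule are illustrative assumptions, not the actual Neubot or Measurement Kit code.

```python
def next_bitrate_kbps(ladder_kbps, segment_bytes, elapsed_s):
    """Pick the bitrate to request for the next emulated DASH segment.

    ladder_kbps: available representations, in kbit/s (hypothetical values).
    segment_bytes: size of the segment that was just downloaded.
    elapsed_s: time it took to download that segment, in seconds.
    """
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    if not ladder_kbps:
        raise ValueError("ladder_kbps must not be empty")
    # Throughput observed while downloading the previous segment.
    measured_kbps = segment_bytes * 8 / 1000 / elapsed_s
    # Assumed policy: highest representation that fits in the measured rate,
    # falling back to the lowest one when nothing fits.
    fitting = [rate for rate in sorted(ladder_kbps) if rate <= measured_kbps]
    return fitting[-1] if fitting else min(ladder_kbps)


if __name__ == "__main__":
    ladder = [100, 500, 1000, 2500, 5000]  # illustrative ladder
    print(next_bitrate_kbps(ladder, segment_bytes=250_000, elapsed_s=2.0))
```

The sequence of bitrates chosen over a whole run is then a rough proxy for the video quality a user would have experienced on that path.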

After mentioning that, I went on to describe what I learned from that experience, and from reasoning more generally about measuring network-neutrality-related metrics. I started with a historical perspective and then gave an overview of the current landscape.

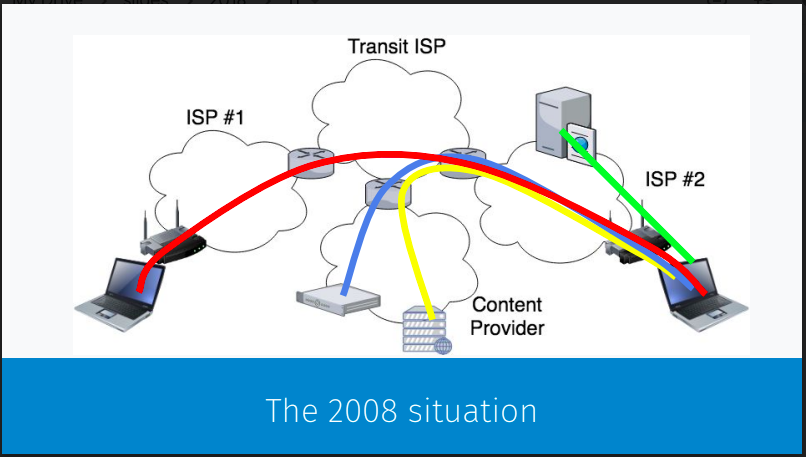

My second slide, in fact, describes the measurement scenario around the end of the previous decade, when the emergence of Measurement Lab made it possible to write Internet measurement experiments that users could run from their computers towards measurement servers provided by Measurement Lab. I went on to explain how this measurement infrastructure was excellent for tackling the most pressing measurement issues at the time (i.e. the performance with which users could access Internet content and the selective throttling of specific peer-to-peer protocols, chief among them BitTorrent).

Regarding speed measurements, Measurement Lab servers are traditionally located in data centers and Internet eXchange Points (IXPs) that are close enough to Internet content. So tests like NDT and Neubot (the blue line in the figure) could provide a reasonable proxy of the performance of “using the Internet” (the yellow line in the figure).

While discussing this matter, I also pointed out that measurements in many cases do not take into account the fact that there is a wireless link on the path (a WiFi link in the picture), and that this is an additional source of complexity because of interference and other effects. (Incidentally, this problem is even more urgent today, with the surge of 2G, 3G, and 4G usage.)

As for measuring protocol throttling, Glasnost and other experiments relied on the fact that ISPs were discriminating against any BitTorrent-like flow, regardless of its destination. So, a server located in a well-connected datacenter was functionally equivalent to a BitTorrent peer in another ISP (see the red line in the figure). What’s more, since Measurement Lab servers were generally much better provisioned than random BitTorrent peers, it was quite sensible to attribute performance issues to either problems on the user’s side or the ISP’s interference. And user-side problems (e.g. WiFi issues) could be singled out by running non-BitTorrent tests towards the same server, in order to establish a performance baseline for the path.
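The baseline idea can be sketched in a few lines: run a protocol-like flow and a neutral flow towards the same server, then compare their throughput. This is only an illustration of the reasoning, not Glasnost’s actual algorithm; the function name, the use of medians, and the 0.5 ratio threshold are all assumptions made for the example.

```python
from statistics import median


def compare_to_baseline(baseline_kbps, test_kbps, ratio_threshold=0.5):
    """Compare a protocol-like flow against a neutral baseline flow.

    Both arguments are lists of throughput samples (kbit/s) collected
    towards the same server. The threshold is an arbitrary assumption.
    """
    if not baseline_kbps or not test_kbps:
        raise ValueError("both sample lists must be non-empty")
    base = median(baseline_kbps)
    test = median(test_kbps)
    if base <= 0:
        raise ValueError("baseline throughput must be positive")
    ratio = test / base
    return {
        "baseline_kbps": base,
        "test_kbps": test,
        "ratio": ratio,
        # A slow baseline points at the user's side (e.g. WiFi); a fast
        # baseline with a much slower test flow points at differentiation.
        "suspected_throttling": ratio < ratio_threshold,
    }


if __name__ == "__main__":
    # Hypothetical samples, for illustration only.
    print(compare_to_baseline([8000, 8200, 7900], [1500, 1600, 1400]))
```

Because both flows share the same path and the same server, anything that slows down both (a bad WiFi link, an overloaded access line) cancels out in the ratio, which is what makes the comparison meaningful.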

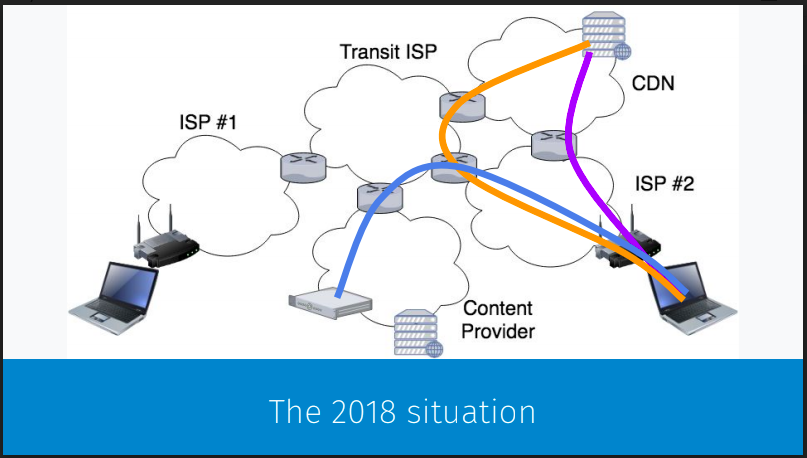

The third slide illustrates the current situation (of course, IMO). Here, I emphasize the importance of Content Delivery Networks (CDNs) because interconnection is increasingly becoming one of the most pressing network neutrality issues. Since 2008, video has been more and more widely used. So content providers and ISPs have used direct interconnections much more frequently, to reduce latency and to better control quality (i.e. losses).

In the above figure, the violet line is a direct interconnection between an ISP and a CDN. We have seen several cases where the ISP and the CDN had a peering war, where one party forced the other to take a longer and likely noisier path (the orange path in the figure). One of the first such cases to go mainstream was the tussle between Comcast and Level 3. In that case, and in many other cases, the longer route obviously also lowered the quality of the streaming, because of higher round trip times, queueing delays, and/or packet losses.
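To see why a longer, lossier path hurts streaming, one can plug numbers into the well-known Mathis et al. approximation for steady-state TCP throughput, roughly `MSS / RTT * C / sqrt(p)` with `C = sqrt(3/2)`. The sketch below is a back-of-the-envelope model only; the RTT and loss values for the two paths are invented for illustration and are not measurements of any real interconnection.

```python
from math import sqrt


def mathis_throughput_bps(mss_bytes, rtt_s, loss_rate):
    """Approximate steady-state TCP throughput (bit/s), Mathis model."""
    if rtt_s <= 0 or not 0 < loss_rate <= 1:
        raise ValueError("rtt_s must be > 0 and loss_rate in (0, 1]")
    c = sqrt(3 / 2)  # constant of the simplified model
    return (mss_bytes * 8 / rtt_s) * (c / sqrt(loss_rate))


if __name__ == "__main__":
    # Hypothetical paths: direct interconnection vs. longer detour.
    direct = mathis_throughput_bps(1460, rtt_s=0.020, loss_rate=0.0001)
    detour = mathis_throughput_bps(1460, rtt_s=0.080, loss_rate=0.001)
    print(f"direct: {direct / 1e6:.1f} Mbit/s")
    print(f"detour: {detour / 1e6:.1f} Mbit/s")
    print(f"ratio:  {direct / detour:.1f}x")
```

In this model throughput is inversely proportional to the RTT and to the square root of the loss rate, so a detour that worsens both compounds the penalty for a single streaming connection.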

Understanding whether there is “throttling” of specific video streams has, therefore, increasingly become a hot policy issue. However, the change in the infrastructure that accompanied the expansion of already large CDNs has made the job of researchers operating with public measurement tools more complex. In many cases, it is either not possible or too expensive to deploy measurement servers near the data.

One solution to this problem is to stream real content and measure its performance. This is what SamKnows does, according to its own documentation, targeting YouTube, Netflix, BBC iPlayer and possibly other video streaming services. In addition to that, they also perform active measurements, presumably with Measurement Lab servers, that emulate video streaming (like the above-mentioned Neubot DASH test does). Although this methodology is not open source, it should in principle be able to detect peering issues, because it measures the actual path.

Another leading tool in this space is WeHe. This app, supported by the French regulator ARCEP, basically “replays” the packets captured during a previous streaming session (e.g. with Netflix or YouTube), sending them to a server controlled by the researchers rather than to the original service. (In the future, WeHe should use Measurement Lab servers as the destination, so you can consider the blue flow in the figure as a WeHe replay flow.) This has proven to be a good approach for detecting when ISPs throttle traffic directed to a specific service based on some feature of the traffic (e.g. the SNI in the TLS ClientHello message). In many regards, WeHe’s methodology is similar to Glasnost’s methodology and it is probably the best way to use existing platforms like Measurement Lab to measure throttling of video services. However, I still fail to see how this approach could detect a peering issue like the one in the figure, because the network test and the video streaming may in principle use two distinct network paths.

I ended my talk mentioning that one may be tempted to conclude that having measurement servers inside CDNs is the definitive way to spot interconnection issues. Since this seems to be complex to do, I said I believe that a mixture of techniques for detecting signature-based throttling (like WeHe does), interconnection issues (by measuring the service directly like SamKnows does), and baselines (like SamKnows and Neubot DASH do) is probably a reasonable second-best solution to address the video throttling policy question.